We scanned the feeds so you don’t have to. The noise was high today, but the underlying signals were unusually clear.

📱 SCROLL (today's online madness)

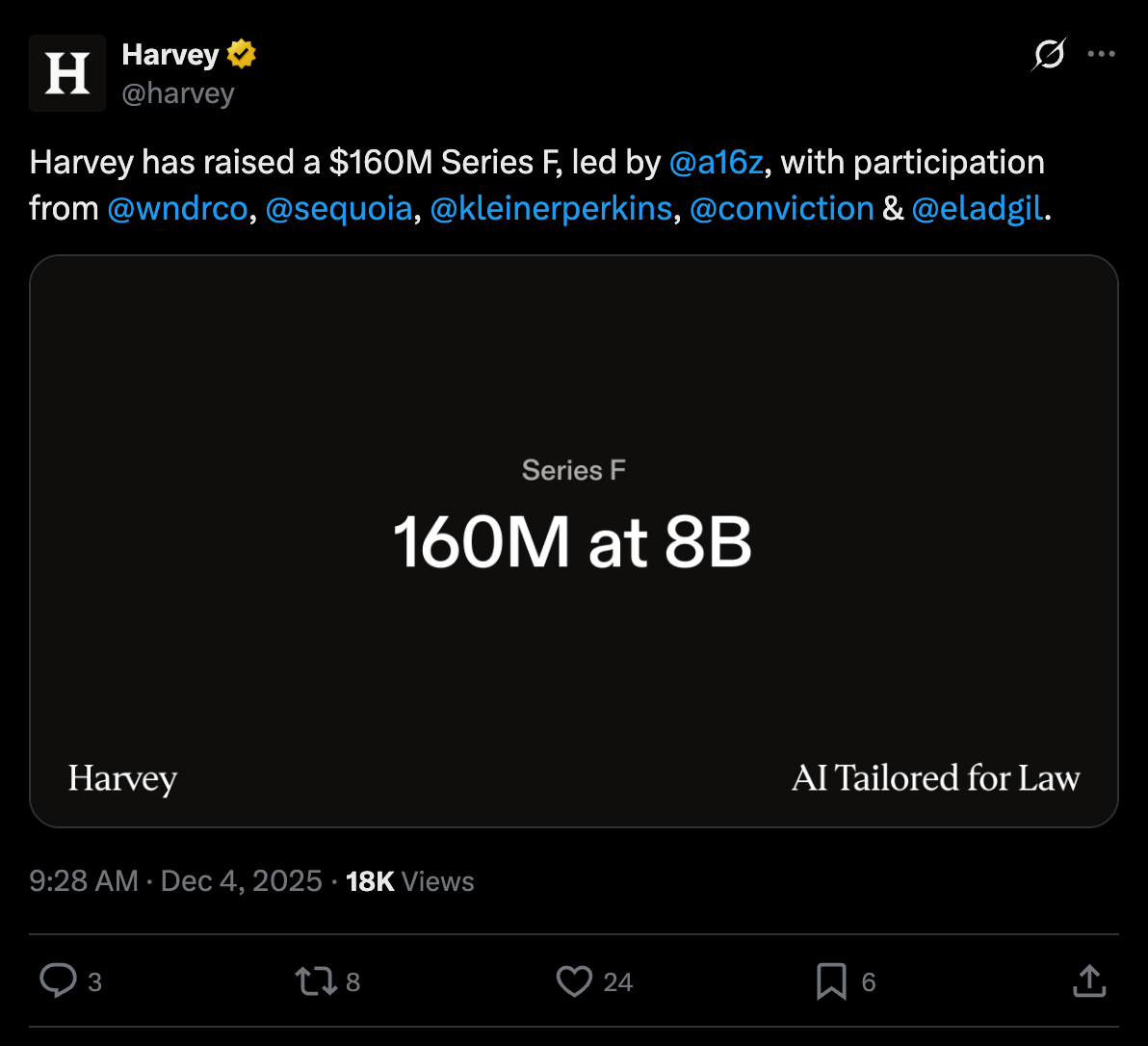

Harvey raises $160M at an $8B valuation, led by a16z

Harvey is solidifying its position as the default AI infrastructure layer for enterprise legal teams.

Why it matters: Regulated, high-liability sectors are moving from pilot → production. This is the clearest inflection point yet for real enterprise AI spend. Harvey is also completing its first tender offer, another sign of durable investor confidence.

Source: Harvey (X)

Trump administration is moving its tech agenda from AI → robotics

Federal alignment signals robotics is moving from niche automation to a national productivity mandate.

Why it matters: Policy direction de-risks capex, accelerates deployment, and creates a multi-decade investable surface across autonomy, hardware, and industrial AI.

Source: Politico

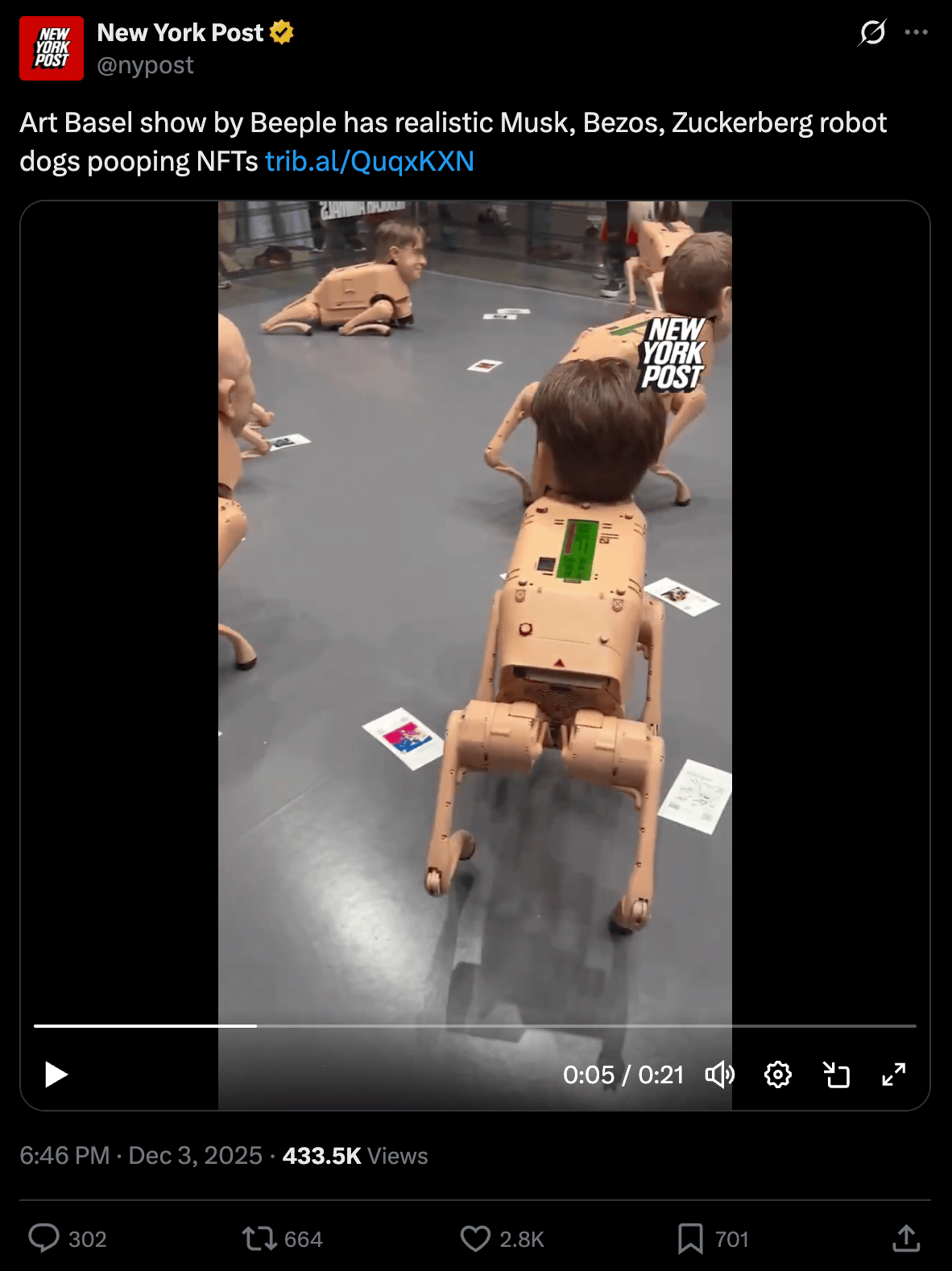

Beeple's Art Basel show features realistic Musk, Bezos, and Zuckerberg robot dogs pooping NFTs

Tech has crossed into mainstream cultural mythology, a marker of narrative saturation.

Why it matters: When tech becomes culture, it shapes consumer perception, regulatory sentiment, and the memetic channels that drive adoption cycles.

Source: New York Post (X)

⚗️ FOR YOU, FILTERED

Today’s Filter: What is Continual Learning?

TL;DR:

Continual learning lets AI models update in real time instead of freezing after one massive training run.

The Problem:

LLMs are static. Once trained, they can’t ingest new data, they tend to forget what they already know when fine-tuned on something new, and keeping them current requires expensive end-to-end retraining.

The Upgrade:

Smaller models with memory that update themselves without forgetting what they know.

Think of it like this:

Rebuilding your entire codebase for every change (static LLMs) vs. pushing incremental commits continuously (continual learning).

The Result:

Faster adaptation, cheaper training, smaller datasets, and models that improve continuously instead of resetting every cycle.

Why should you care?

This is the unlock for real-time enterprise AI: lower compute costs, shorter development cycles, and AI systems that stay current as the world changes.
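Want to see the "incremental commits" idea in miniature? Below is a toy Python sketch, not how any production LLM is actually trained. It assumes a hypothetical one-feature linear model (`ContinualLinearModel`, an illustrative name) and a made-up data stream following y = 2x + 1. Every new example triggers a single gradient step instead of a full retraining run. A small replay buffer mixes a few stored past examples into each update. Replay (also called rehearsal) is one common way to reduce forgetting. The newsletter doesn't name a specific method, so treat that choice as our assumption.

```python
import random
from collections import deque


class ContinualLinearModel:
    """Toy model y ~ w*x + b, updated one example at a time (illustrative only)."""

    def __init__(self, lr=0.1, buffer_size=100, replay_k=4, seed=0):
        self.w = 0.0
        self.b = 0.0
        self.lr = lr
        # Bounded memory of past examples; the oldest drop out first.
        self.buffer = deque(maxlen=buffer_size)
        self.replay_k = replay_k
        self.rng = random.Random(seed)

    def predict(self, x):
        return self.w * x + self.b

    def _sgd_step(self, x, y):
        # One gradient step on the squared error 0.5 * (pred - y)^2.
        err = self.predict(x) - y
        self.w -= self.lr * err * x
        self.b -= self.lr * err

    def update(self, x, y):
        # Learn from the new example right away: the "incremental commit".
        self._sgd_step(x, y)
        # Rehearse a few stored past examples (assumed anti-forgetting strategy).
        if self.buffer:
            k = min(self.replay_k, len(self.buffer))
            for old_x, old_y in self.rng.sample(list(self.buffer), k):
                self._sgd_step(old_x, old_y)
        self.buffer.append((x, y))


# Hypothetical stream: examples arrive one by one, and there is never a full retrain.
model = ContinualLinearModel()
stream = random.Random(1)
for _ in range(2000):
    x = stream.uniform(-1.0, 1.0)
    model.update(x, 2.0 * x + 1.0)

print(f"w={model.w:.3f}, b={model.b:.3f}")
```

The replay buffer is what does the "without forgetting" work in this sketch. Revisiting stored examples makes it less likely that updates on fresh data overwrite what the model learned earlier. Real continual-learning systems may use other strategies instead, such as regularization-based or memory-based methods.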

📡 SIGNALS

Theory’s done. Let’s see who’s actually executing.

What is it? A habit app with an AI coach designed to personalize daily behavior change.

Why it matters: Moves wellness from passive tracking → active intervention driven by personal context.

What is it? A unified interface for running background coding agents.

Why it matters: Early infrastructure for ambient developer agents: always-on automation for engineering teams.

What is it? A self-healing software layer that detects failures and automatically recovers systems.

Why it matters: Reliability becomes an AI-native layer, reducing downtime, on-call load, and debugging overhead.

Your feed was unhinged.

Your insights don’t have to be.

Forward your next newsletter to Muse and let it stitch everything together while you go touch grass.